A bug bounty program is a deal in which companies (usually medium to large ones) offer rewards (in most cases monetary) to people who responsibly disclose and report bugs or -in the context of this article- vulnerabilities related to their systems or infrastructure. This phenomenon has grown considerably in recent years and is expected to grow even more in the upcoming 5-10 years. In this article, I will try to explore some of the implications of these programs, both from the technical and -most importantly- the political point of view.

The Benefits of Bug Bounty Programs

Let’s get this out of the way first. Bug bounty programs have advantages: they provide some tangible benefits that cannot be completely ignored. For companies, they are a way to have bugs reported and disclosed so that they can be fixed, rather than sold and/or abused. The idea is simple: if you have a better (and safer) financial incentive to report the bug compared to selling it on the black market or abusing it yourself, it’s reasonable to think you will do that. This also means that people who accidentally find some bug will have the means to report it without having to worry about getting in trouble for it (which used to be the case, and still is, in many instances).

For security researchers/white hat hackers/security enthusiasts etc., bug bounty programs can become a way to somewhat monetize activities that would otherwise have been carried out purely as a hobby. In addition to this, such programs allow researchers to play with real systems in a legal way, rather than having to work purely in a lab environment (which is arguably less fun).

Obviously other folks will have additional arguments to add to the list, but I hope that what I listed so far is reasonable enough to demonstrate that my objective in writing this piece is not to fully condemn bounty programs nor declare their futility.

The Technical Downsides

Let’s now start analyzing the downsides of these programs. First and foremost, the relevance of these downsides depends on how a given company or organization builds its program and, similarly, on how an individual performs his or her research; however, I will try to point to some specific factors that have a negative impact on having (or participating in) a bounty program.

For companies it is absolutely critical to have a good disclosure process that allows researchers acting in good faith to safely report bugs without having to worry about legal consequences and without having to jump through many hoops (usually an e-mail ping-pong with customer support). However, bug-bounties and disclosure programs in general need to be perceived exactly for what they are: a nice-to-have way to have bugs reported on the part of a company’s systems that is most publicly exposed. The problems start when companies start considering a bug-bounty program as a security control. I know, not all companies do that, but some do and, in addition, they are encouraged to do so.

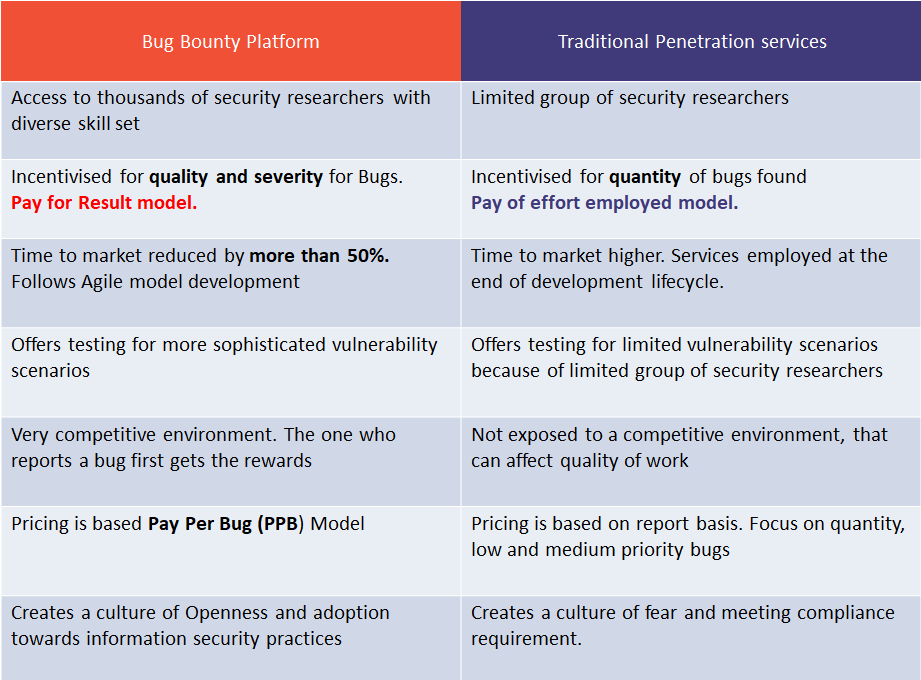

Already a couple of years ago I found a picture that was in my opinion representing this exact view:

I stumbled across this image in one of the many (too many) low-effort posts that make up virtually all of LinkedIn. Of course, this post was created by a company that offers a bug-bounty platform (more on this later), so it’s not surprising that they would advertise their own business. However, the growing number of platforms that organize bounty programs is trying to create this narrative: that if you create a program you are basically subscribing to a continuous penetration test of your systems and, as if that wasn’t enough, you have to pay only when bugs are actually found (and even in those cases, not always!).

It’s clear that this is absolute nonsense, and a quick Google search will show an even larger number of posts, usually made by companies that offer penetration tests, that stress the opposite arguments (bug bounties are unstructured, partial etc.).

A small detour about pentests

Allow me a small rant about penetration tests. I have worked in a company that underwent at least 4 or 5 penetration tests in the last few years. Usually they lasted a couple of weeks and cost from 10 to 20k Euro. The quality of most penetration tests is abysmal. Some companies call it a penetration test when one guy, possibly not even very experienced, runs Nexpose (or similar tools) against your infrastructure, exports the results to CSV and sends the report to you. Using this kind of penetration test as an argument against bug-bounty programs is obviously as nonsensical as the argument I discussed earlier (on why to prefer a bug-bounty program).

The technical Reasons why Bug Bounties are inherently crippled

I mentioned earlier that telling a company to prefer bug-bounty programs to penetration tests (as in, to replace the latter with the former) is nonsense, but I didn’t articulate why. So, here are some of the reasons:

- The scope of a bug-bounty program is obviously limited to the public-facing systems and infrastructure. You don’t provide VPN credentials to bounty hunters to test your internal systems.

- Most of the findings (for reasons that I will discuss shortly when covering the perspective of the hunter) are found through automation, not much differently from how “pentests” are sometimes carried out by the average security firm. Automation for security audits has its known limitations and it is very rarely sufficient to discover logical bugs that are the result of multiple issues.

- Bug bounty programs attract script kiddies who learn to write ‘alert(1)’ in every box (often without understanding the result) and also people who are just after money (which is not bad per se, but it is bad when people want the money without the bugs). I am sure this has a fun component (plenty of reports must be hilarious), but it also has some negative consequences for an organization. For example, each report should (whether this happens or not is hard to say) be validated, triaged, and possibly responded to. This takes time (and is not fun work either) from security professionals who could use their time in a better way.

- There is hardly any prioritization. Bounty hunters will not focus specifically on high-impact vulnerabilities or systems and, since hunters are after any bounty at all, you might get a disproportionate number of reports (and therefore also testing coverage) for low-criticality vulnerabilities, rather than a focus on what would cause actual damage to your organization first.

Once again, all this is not to say that bounties do not have any advantage for organizations, but I want to stress that, given their erratic and incomplete nature, bug-bounties are not a security control per se. At most, they are a control for the risk “some person outside the company finds a bug in our systems and doesn’t know how to report it or has no incentive to do so”. That’s it; this is what organizations should aim to address with bounties.

The Security Researcher perspective

Now, let’s look at the security researcher perspective. Bug-bounties are sometimes proposed to junior people as a learning opportunity, an occasion to learn tools and techniques. Others see them as a way to make money and, finally, for others still they are a way to have fun and/or gain ‘street creds’.

In my opinion, the only valid point is the last one: if you do have fun doing it, it makes sense. If you are in it for the money or for the learning component, you are much better off doing something else. Before taking up the pitchforks, allow me to explain. Let’s start from a premise, which is easily verifiable by talking with people who do bug-bounties. a) Most bug hunters specialize in one (or maybe a couple of) classes of vulnerabilities; someone might be specialized in finding XSS, someone else is a sadistic person who likes to experiment with request smuggling etc., but the bottom line is that usually the recommendation is to get good at one class of vulnerabilities and look only for that. b) Most of the research is automated, at least to filter the scope. Successful hunters rarely work on one program at a time, dedicating their full attention to it. They will rather work on as many programs as they can in parallel, through automation, and then eventually dig deeper where some interesting or suspicious finding pops up.

This premise is useful to explain why bounties are a bad (or not optimal) way to learn or to make money. First, while learning it is extremely reductive to focus on one class of vulnerabilities only (for obvious reasons), so if you want to learn, it’s likely you want to cover a broader range of subjects. This wouldn’t be a problem, except for the fact that most real-life systems are too complex and too noisy to practice security testing on. Besides, it’s much harder to practice the discovery and exploitation of vulnerabilities on systems that may or may not be vulnerable. It is much more efficient to learn XSS in a lab scenario, where you have access to the ‘application side’ as well, rather than just throwing payloads at a system without a proper way to gather feedback or inspect the results (as in: did it fail because I am doing the encoding wrong, or because the application is simply not vulnerable?).

Let’s now talk about the money: searching online, it’s not uncommon to find young researchers asking for suggestions on how to become full-time bounty hunters or for recommendations on complementing one’s salary with bug hunting. One thing that all these questions have in common is the number of people who answer that doing bug bounties with the goal of making money is a terrible investment. The arguments are very simple:

- It is absolutely not guaranteed that you will find a bug.

- If you find a bug, it is very likely it will be a low (or medium) severity bug.

- It is absolutely possible that the bug you found has already been reported (and in most cases, this means you won’t get paid).

- In some cases it can take months to get a bug confirmed and the bounty paid.

- There are many ways to shoot yourself in the foot, and perform some action that -at the discretion of the company- will disqualify you from the program, even if the bug is valid and confirmed.

- It can take you hundreds of hours to find a single bug.

Looking at some data published by PortSwigger not so long ago, the bulk of the users of HackerOne (one of the major bug bounty platforms) make less than $20000 a year. This is probably the number before taxes (because the T&C of HackerOne clearly state that taxes are your responsibility), therefore it’s reasonable to assume that the actual number is 20-40% lower, somewhere around $15000. Most of the researchers make less than this, which means that the median is probably lower. According to another article, the top 1% of hunters earn on average $35000, from which, again, we probably need to subtract income taxes.
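
The tax adjustment above is simple arithmetic, sketched here in Python. The flat tax rate is a simplifying assumption (real income taxes are usually progressive and depend on the country), and the function name `net_income` is purely illustrative.

```python
def net_income(gross: float, tax_rate: float) -> float:
    """Income left after a flat tax rate (a simplification of real tax systems)."""
    return gross * (1 - tax_rate)


GROSS = 20_000  # yearly figure quoted above for the bulk of users

# Apply both ends of the 20-40% tax range assumed in the text.
low = net_income(GROSS, 0.40)
high = net_income(GROSS, 0.20)

print(f"Net income between ${low:,.0f} and ${high:,.0f}")
```

Under this assumption the $20000 gross figure shrinks to somewhere between $12000 and $16000, which is where the rough $15000 estimate comes from.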

At this point, it really boils down to how much time is invested into this work, and (something that is very often forgotten) the opportunity cost of those hours. Do you have an automated setup which, with minimal supervision and work, can earn you $10-15k in a year? That sounds like a great investment. Are you looking for work and thinking of becoming a full-time hunter? Chances are, money-wise you will be earning way, way, way less than you would with a regular (in most cases even part-time) job. Sure, there are some perks, like total flexibility etc., but there is also a high degree of instability. It’s not easy to go to a bank for a mortgage and present your HackerOne profile saying “don’t worry, next year I am doing even better”, especially since -once again- you are competing against a plethora of people who most likely: a) are specialized in different classes of vulnerabilities, and b) have already automated most of their setup, meaning that they will most likely pick the low-hanging fruit, leaving you the more complex bugs that require more time. All this doesn’t apply to countries with lower salaries, where $10000 is already a very high salary. I hope that remote work will present those people with even better opportunities, but if that’s not the case, then sure, doing bounties can be worth the effort.
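
The opportunity-cost argument can be made concrete by converting yearly earnings into an effective hourly rate. In this rough sketch, the weekly hours and the 48 working weeks per year are hypothetical values chosen only for illustration; the $15000 figure is the upper end of the range mentioned above.

```python
def hourly_rate(yearly_income: float, hours_per_week: float, weeks_per_year: int = 48) -> float:
    """Effective hourly rate for a given yearly income and weekly time investment."""
    return yearly_income / (hours_per_week * weeks_per_year)


YEARLY_BOUNTIES = 15_000  # upper end of the $10-15k range mentioned above

# Hypothetical scenario 1: a mostly automated setup needing about 5 hours of supervision a week.
automated = hourly_rate(YEARLY_BOUNTIES, hours_per_week=5)

# Hypothetical scenario 2: full-time hunting at 40 hours a week for the same payout.
full_time = hourly_rate(YEARLY_BOUNTIES, hours_per_week=40)

print(f"Automated setup: ${automated:.2f}/hour")
print(f"Full-time hunting: ${full_time:.2f}/hour")
```

With these assumed numbers, the same yearly payout is worth eight times more per hour in the automated scenario. The full-time rate is the number to compare against what a regular job would pay for the same hours.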

You might also think that finding some bug can be a nice addition to your CV or ‘street creds’. In fact, there are still plenty of programs that “pay” you in “points” or by adding you to some hall of fame. As a person who participated in the hiring process for security professionals, I believe that a bounty does give you some points, but absolutely nothing that a certificate, a degree or simply a nice conversation where you demonstrate understanding of a given topic doesn’t give as well. It could lead to good consideration if it’s a very complex vulnerability that demonstrates your persistence and technical capabilities in a wide range of topics (chaining multiple vulnerabilities for example, although this is not possible in many programs), but definitely it won’t blow anybody’s mind seeing you discovered some reflected XSS in the search page of a minor website, or 20 XSS in various sites.

To sum it up, I believe that for a small percentage of people, bounties are a nice-to-have source of income if -and only if- a minimal amount of work is put into them. They are not the best way to learn or to make money for the majority of people. If you are out of work and you are looking to earn some money before you find another job, I would argue that it is a much, much better investment to spin up a couple of virtual machines and learn about some popular topics rather than investing hundreds of hours (because this is most likely what it takes an inexperienced person) in the hope of getting some $200-1000 bounty, without learning too much in the process. I would argue that a $50 book read and practiced cover-to-cover (for example this) in 200 hours will give you a way bigger edge in job hunting (for an appsec position, for example) than having 2 medium bugs found within the same time (and I am being overly optimistic here).

The Social and Political aspects

So far we have discussed mostly technical aspects, but I think it’s equally, or maybe even more, important to discuss the social and political implications of these programs. Bug-bounties, in fact, do not exist in a vacuum: they exist within a specific social context made of workers and organizations with different (and often contrasting) interests, of budgets (in terms of money and time) and so on.

The Power Imbalance

My first and main issue with these programs is that all workers’ rights are completely destroyed. This is one of the selling points of bug-bounties according to the picture I included at the beginning: you don’t need to pay for the time spent, you pay for the result. This means that you are not paid for the work you do, you are paid if -and only if- the outcome of your work is deemed useful by the company at its sole discretion. The company decides that your bug is somehow not in scope for the program? Tough luck. The company already got that bug reported (in many cases, you wouldn’t know)? Tough luck.

You, as a person doing actual work that benefits the company, have no rights whatsoever. I also want to remind you here that finding no issues in a given system is already a valuable result for the company. As an organization, you get at least some level of confidence that no trivial bug exists (for a given class) in a given part of your systems, at a given point in time, exactly like a clean penetration test result is a very valuable (and sought-after) result.

In the case of bounties, you are on your own. You might spend hundreds of hours testing the systems of an organization, a task that by itself would cost 2 months of salary for a professional, and you are doing it for free. Nothing guarantees that this time will actually be paid for and, if it does get paid, it might be at an extremely low rate for the hours invested.

This power imbalance is not just something I perceive because of my political views; it has very concrete implications and visible symptoms. There are many horror stories from security researchers, from a classic Facebook story, in which a “misunderstanding” led to Facebook reaching out to the researcher’s employer, to Apple refusing to pay, and many others. The common denominator here is that you have no guarantees whatsoever not only that you are going to find something, but even that you will be paid for that something, or that you will be paid fairly for it. The power is completely in the hands of the company running the program.

Another aspect of this imbalance concerns intellectual property rights. For example, you might think that if the company decides, without (in your opinion) proper justification, not to pay you, to underpay you, or simply ignores your report, you might publish (or sell) the findings you reported. This in some cases is not really ethical, but it would give some leverage to the researcher and would force the company to attribute proper value to the reported findings. However, if we look for example at HackerOne’s T&C we will see:

“Subject to HackerOne’s ownership of any HackerOne Property contained therein, the Customer will own all right, title, and interest to each Customer Report. HackerOne hereby grants the Customer a non-exclusive, non-transferable, perpetual, worldwide license to access, use, and reproduce any HackerOne Property included in each Customer Report.”

Similarly, in Bugcrowd’s T&C we see:

“For the purposes of this section, “Testing Results” means information about vulnerabilities discovered on the Target Systems discovered, found, observed or identified by Researchers” and “Target Systems” are the applications and systems that are the subject of the Testing Services.

You hereby agree and warrant that you will disclose all of the Testing Results found or identified by you (“your Testing Results”) to Bugcrowd. Furthermore, you hereby assign to Bugcrowd and agree to assign to Bugcrowd any and all of your Testing Results and rights thereto.”

Basically, the moment you report anything, you also give up the intellectual property rights to anything you have produced, and you do so unconditionally. This means that you expose yourself legally if you decide to take any action against unfair treatment (for example, publishing the bug on your own) and of course you potentially jeopardize your ability to continue “working” as a hunter on the same platform in the future. It doesn’t matter that the fault might have been the company’s: you have no protection. I have picked two of the biggest platforms just as an example, but I am sure that the same applies to many if not all the other major platforms as well.

This framing of security research is still an improvement over the criminalization that used to happen not too long ago (and that still happens nowadays), but it is far from an ideal solution or an optimal result.

Platforms

My second issue with these programs, very much linked to the previous one, is the fact that bounty programs, the way they are organized today, encourage parasitic platform capitalism. What I mean by that is that (again, the way they are built right now) these programs require a middleman which does not directly perform any work, but leeches on the work done both by the company (through some subscription fee) and by the researchers (through a cut of the reward) in order to generate profit. The platform itself is not necessary and, once built, it has basically no cost (whatever it might cost to run a website), but it extracts profit from the work of others.

The issues with Platform Capitalism are much better discussed by Srnicek in his book, which I highly recommend, so I won’t dig too much into why this is a problem.

Why do such platforms need to be for-profit and -most importantly- why do we need so many of them? We have plenty of public or kind-of-public institutions that deal with Cyber Security. For example, why can’t a public platform be developed, open sourced and then run independently by national CERTs, or maybe even centralized? I personally do not see any notable advantage in using Bugcrowd versus using HackerOne or one of the many other platforms: they are functionally identical. The difference is that a for-profit company is behind each one of them, which is part of the reason for the imbalance of power discussed earlier: a for-profit company will always be incentivized to protect the paying customer (which is the company sponsoring the program), while a semi-public institution could be a more impartial referee in potential conflicts. Furthermore, having to sign up to tens of platforms, each with its own rules, is unnecessarily annoying for researchers as well.

Labor

The final argument in terms of socio-political implications has to do with labor. This is probably the most obvious one: doing bug bounties is essentially doing free labor (unless something is found), without a contract and without any guarantee. Of course everyone is free to perform free labor, but it is worth reasoning on the implications that this has as well. Bug bounty platforms will push for security done through their programs rather than through penetration tests or security audits. Whether they will succeed is debatable, but the implications are pretty clear: we are replacing workers who have a given salary and guarantees with a number of workers that are not paid for their labor but only for their (not guaranteed) results, and paid in a non-predictable way as well. This is obviously a step back in wealth distribution and a step back in stability, and as workers, we should actively fight this narrative. In fact, as a security professional, I feel this is my duty as well, and it is one of the reasons why I decided to write this post in the first place.

One of the most disturbing facts is that the companies that get most of this free labor are the companies that, if not “don’t deserve it”, at the very least don’t need it: Google, Facebook, Twitter, Apple, etc. This is because finding a bug in Google gives more ‘street cred’ than finding one in my blog, and probably because they also pay better (on paper) for findings. However, these are companies that have plenty of resources to pay internal teams and are also the ‘innovators’, who maybe should be the ones leading the solution to the problems addressed in this post: how to pay researchers for the work they have done, and not for the individual findings this work generated.

I don’t want to sound like a catastrophist: I don’t believe that tomorrow, or in a year, or in 10 years, companies will fire internal (offensive) security teams and replace them with bug bounty programs. This is not realistic, of course. However, it’s possible that the internal teams might be 5-10% smaller than what they could be, because the company, for example, outsources the assessment of some part of its systems (public web applications, for example) to bug hunters. If this were the case, we would be talking about replacing high-paying stable jobs with essentially free labor. We would be talking about more pressure on the job market, which in turn will push more people to do free labor to ‘get visibility’ and enrich their CV, and all the similar phenomena already way too common in other fields, from show business to graphic design, in a vicious circle that will benefit companies and hurt workers.

Conclusion

Within the post I discussed how bug-bounty programs, the way they are run today, are not the best opportunity for learning or for making money. I think that these programs are necessary, in the sense that companies should incentivize researchers to responsibly disclose a bug if they find one, but there are some caveats, which I want to sum up in a few points:

- As a security professional, you should frame the utility and function of a bug-bounty program in the correct way, similarly to how you should frame a penetration test in the correct way. A bug-bounty program is useful to give an option to researchers who find (accidentally or deliberately) a security issue in your systems, and it also contributes to the de-criminalization of white hats. It does not represent a security control that in any way makes redundant proper security investments within the company or an internal offensive security team (for organizations of a certain size at least).

- If you are a security researcher and you are looking for learning opportunities, I think that books, labs, CTFs and auditing (Free - as in speech) Open Source projects are a much better way both from your personal point of view and also from the ethical point of view. Rather than wasting 50 hours to find, maybe, some XSS in some Facebook URL, you might spend 50 hours to do some testing for a FOSS project that actually helps people. Who knows, this might also get you a foot in the door of a community, in addition to the fact that you are way more likely to find vulnerabilities there.

- If you are a security researcher and you are looking for money, then unless you are really specialized in some vulnerability class and you have proper automation (or you intend to build it without having to invest too much) in place to look for those bugs in almost complete autonomy, don’t bother. Rest your mind to perform better during your regular work, spend time in whatever other way you want or if you need to really monetize this job, get started on freelancing, teaching, consulting etc.

- Finally, if you are a security researcher and you are looking for fun, bug-bounties might be for you. If you get joy from finding a bug, and if you want to stack ‘street creds’, then you might get the benefits of bug hunting. However, even in this situation, let an Internet stranger encourage you to reconcile ethical motives with entertainment: there are plenty of software projects and tech organizations that do, or try to do, good in the world. Spend your time there, rather than doing free labor for companies that could pay an army of security researchers if they wanted to (Facebook, Google, Apple, etc.). You will have fun, you will get your CVEs, but you will also help people: not only the organization/team (that most likely doesn’t have the concrete means to pay for security professionals yet) but also the people who use those products. For companies you can pick a tech co-op, and for software there are of course so many options that it would take a whole post to even scratch the surface.

As tech workers, it’s important to consider our work in the bigger context, to consider the implications of our actions, the faults of the current tech environment and to contribute in any way we can to improve it.